Networking with Data Gravity

Digital Realty’s Data Gravity Index report estimates that by 2024, the G2000 Enterprises across 53 metros will create 1.4 million gigabytes per second, process an additional 30 petaflops and store an additional 622 terabytes per second. This will certainly amplify data gravity. Ciena’s Ryan Perera explains the data gravity trend and how network operators can adapt to the dynamic universe of digital transformation while building a balanced system. This article was originally published on ET Telecom.

Data is being created everywhere: in and around our homes, offices, factories and machines. As enterprises pursue digital transformation and continue to evolve toward Industry 4.0, data growth will be further driven by Digital Twin strategies that rely on connected Internet of Things (IoT) devices, cognitive services and cloud computing services. New, emerging applications like the Metaverse will also drive growth and put more pressure on our underlying communication networks. In fact, Credit Suisse [1] estimates that the increasing interest in the Metaverse’s immersive applications and 3D environment will require telecom access networks to support 24 times more data usage over the next ten years, and that data must be delivered reliably, cost-effectively and with lower latency.

With exabytes of data being created daily, enterprises and public cloud providers are using data lakes to process, store and transform data to generate insights and improve consumer experiences. These large bodies of data are now becoming Centers of [Data] Gravity [2] for enterprise systems, pulling other data and applications close, much as a planet’s gravity acts on the objects around it. As the (data) mass increases, so does the strength of the (data) gravitational pull. In the past, data centers were built in locations that were optimal for space and power. Now, storage-oriented ‘data lakes’ are being built closer to end users, and these data lakes, paired with CPU/GPU power, are pulling applications and workloads toward them.

The Effect of Data Gravity

Digital Realty’s Data Gravity Index [3] report estimates that by 2024, the G2000 Enterprises across 53 metros will create 1.4 million gigabytes per second, process an additional 30 petaflops and store an additional 622 terabytes per second. This will certainly amplify data gravity. Data Gravity Intensity [4], which is determined by data mass, level of data activity, bandwidth and, of course, latency, is expected to grow at a 153% CAGR in the Asia Pacific region, with certain metros exerting a stronger pull than others.
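To make the relationship between those four inputs concrete, here is a minimal Python sketch. It assumes the form commonly quoted for the Data Gravity Index, intensity = (data mass × data activity × bandwidth) / latency², which this article does not spell out, so treat the exact formula, the function names and the units as illustrative assumptions. The second helper simply shows what a 153% CAGR implies when compounded.

```python
def data_gravity_intensity(mass_gb, activity_gb_per_s, bandwidth_gbps, latency_ms):
    """Illustrative intensity score (assumed formula, not taken from this article).

    Grows with data mass, data activity and bandwidth;
    shrinks with the square of latency.
    """
    if latency_ms <= 0:
        raise ValueError("latency_ms must be positive")
    return (mass_gb * activity_gb_per_s * bandwidth_gbps) / (latency_ms ** 2)


def compound_growth(start_value, cagr, years):
    """Value after `years` of growth at a compound annual growth rate.

    `cagr` is a fraction, so a 153% CAGR is passed as 1.53.
    """
    return start_value * (1 + cagr) ** years


# Hypothetical metro: same data and bandwidth, two different latencies.
near = data_gravity_intensity(mass_gb=100, activity_gb_per_s=2,
                              bandwidth_gbps=10, latency_ms=5)
far = data_gravity_intensity(mass_gb=100, activity_gb_per_s=2,
                             bandwidth_gbps=10, latency_ms=10)

# A 153% CAGR multiplies the starting value by 2.53 every year.
three_year_multiple = compound_growth(1.0, 1.53, 3)

print(near, far, round(three_year_multiple, 2))
```

Under this assumed formula, doubling latency cuts the intensity score to a quarter, which is why proximity matters so much. A 153% CAGR compounds to roughly a sixteenfold increase over three years.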

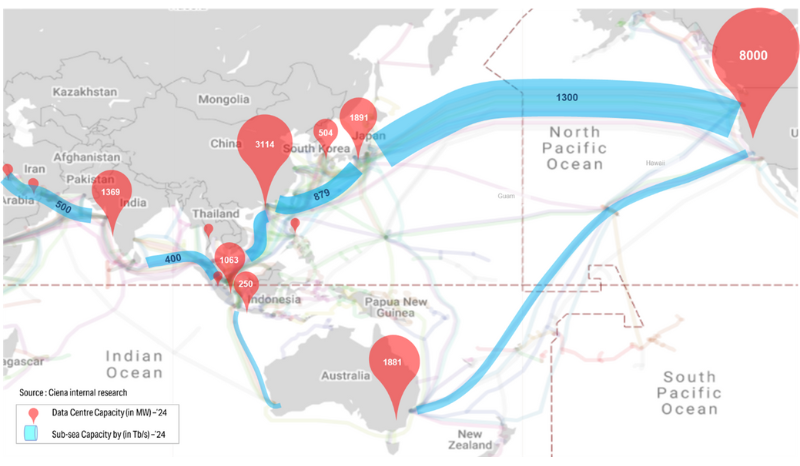

Figure 1: Data Gravity Centers in Asia

Data Gravity Intensity in Asia Pacific is highest mostly where large public data center regions are located. These centers (the red bubbles in Figure 1, with capacity in megawatts) are well served by both terrestrial and submarine networks (the blue cylinders, with capacity in terabits/s). Additionally, more than 17 new open-line submarine cable systems are expected to be commissioned between 2023 and 2025 to interconnect these regions with the lowest latency and highest spectral efficiencies. Leading regional telecom providers are partnering with public cloud providers to build these new submarine network corridors.

Given the ever-increasing gravitational pull of these data clusters, we expect them to grow further while pulling other, smaller clusters to be built closer. As can be seen in Figure 1, the high-intensity data gravity sites are mostly in highly populated urban metros. To mitigate power and space limitations, we see these data centers growing in cluster fashion over optical WAN mesh underlay networks, including campus-type data center clusters. Gone are the days when hyper-mega data centers were built in remote locations around the world.

Data gravity can, however, create unforeseen challenges to digital transformation when factoring in business locations, proximity to users (latency), bandwidth (availability and cost), regulatory constraints, compliance and data privacy. Public clouds, with their vast portfolio of services, have long been seen as the obvious destination to which enterprises move all their workloads. But given concerns about egress costs, data security, over-dependency and disaster recovery, the majority of enterprises are now pursuing hybrid multi-cloud strategies while trying to navigate data gravity barriers.

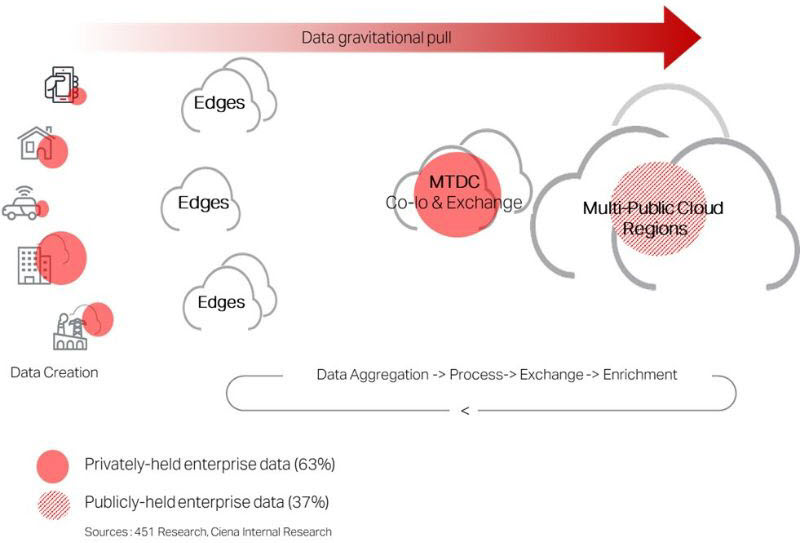

Figure 2: Data Creation Cycle & Data Gravitational Pull

Navigating Data Gravity Barriers

To address the challenges of data gravity, enterprises are fast adopting neutral co-location sites (centers of data exchange) to store data with low-latency connectivity to both public and on-premises clouds. In fact, 451 Research [5] found that 63% of enterprises still own and operate data center facilities, and many expect to leverage third-party/colocation sites such as Multi-Tenant Data Centers (MTDCs) with access to multi-cloud and other ecosystems, while navigating disaster recovery and data gravity barriers.

The distributed infrastructure of computing, network and storage will increasingly involve specialized resources, such as chipsets built for artificial intelligence (AI) training and inference rather than for general-purpose applications. Furthermore, edge cloud systems will be of limited scale given their space and power constraints. Thus, to avoid stranding resources, the industry has identified the need for a Balanced System [6], one that achieves optimal use of distributed computing, storage and network connectivity resources. A declarative programming model is required to achieve this balanced system and to tightly couple the application context with the infrastructure state. Moreover, in an Application Driven Networking paradigm, applications care about completion times for Remote Procedure Call (RPC) sessions between compute nodes, and not just about connection latencies. The ecosystem of network operators, including public cloud providers, MTDCs and telecom service providers, must participate in this paradigm with a scalable and programmable network infrastructure, and must expose the relevant APIs to application providers.
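The difference between connection latency and RPC completion time can be illustrated with a small Python sketch. It uses a deliberately simple first-order model, completion time ≈ round-trip time + payload size / bandwidth, and ignores protocol ramp-up, queuing and server processing time. The two paths and their figures are hypothetical and chosen only to make the point.

```python
def rpc_completion_time_s(payload_bytes, rtt_ms, bandwidth_gbps):
    """First-order estimate of RPC completion time in seconds.

    Assumes one round trip plus the time to push the payload through
    the link; real transports add ramp-up, queuing and processing delays.
    """
    if bandwidth_gbps <= 0:
        raise ValueError("bandwidth_gbps must be positive")
    transfer_s = payload_bytes * 8 / (bandwidth_gbps * 1e9)
    return rtt_ms / 1000 + transfer_s


# Two hypothetical paths between compute nodes.
paths = {
    "low_latency_path": {"rtt_ms": 2, "bandwidth_gbps": 1},
    "high_capacity_path": {"rtt_ms": 10, "bandwidth_gbps": 100},
}

payload_bytes = 1_000_000_000  # a 1 GB RPC payload

times = {
    name: rpc_completion_time_s(payload_bytes, **params)
    for name, params in paths.items()
}
best_path = min(times, key=times.get)

for name, seconds in times.items():
    print(f"{name}: {seconds:.3f} s")
print("fastest completion:", best_path)
```

In this model the path with five times the latency still finishes the 1 GB call far sooner, because transfer time dominates. For a payload of a few kilobytes the ranking flips, which is why the application context (payload size, deadline) needs to be visible to the network when paths and sites are chosen.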

How Can the Industry Adapt?

In an era of distributed Centers of [Data] Gravity, MTDCs will play a vital role. They will serve as co-location and data exchange points with high-capacity, low-latency interconnections to the public clouds, mitigating the data gravity barriers for enterprises.

Additionally, in a distributed cloud computing environment, a Balanced System is required more than ever before, with tighter coupling between the application context and network state. Network providers and the vendor ecosystem have a key role to play in building adaptive networks that are scalable and programmable with the relevant API exposure to application providers.

Disclaimer: All views, opinions and data expressed here are solely those of the author and are for general information only. No representation or warranty of any kind, express or implied, is made regarding the accuracy, adequacy, validity, availability, reliability, or completeness of any information in this blog.

References

1. https://www.credit-suisse.com/media/assets/corporate/docs/about-us/media/media-release/2022/03/metaverse-14032022.pdf

2. https://datacentremagazine.com/technology-and-ai/what-data-gravity

3. https://www.digitalrealty.asia/platform-digital/data-gravity-index

4. https://futurecio.tech/understanding-data-gravity-intensity-traps-and-opportunities-in-2021/

5. https://go.451research.com/2020-mi-trends-driving-multi-tenant-datacenter-service-industry.html

6. https://www.youtube.com/watch?v=Am_itCzkaE0